In 2010, I attended two meetings with Facebook staff about how the service could help prevent suicides. One meeting was at the company’s headquarters and was attended by representatives from several tech companies, including Yahoo and Google. The session explored industry-wide approaches to the issue. The other meeting, at Lucy Stern Community Center in Palo Alto, included local officials, educators and parents, and it focused on a rash of local teen suicides, none of which was related to Facebook.

At the time, there were few online resources available to tackle suicide and self-harm. Facebook did respond when it was made aware of a problem, but there weren’t systems in place to deal with the issue at scale. I recall one instance around that time when my nonprofit, ConnectSafely.org, received a note from a depressed young man in the Midwest who was contemplating self-harm. I forwarded that note to a high-ranking Facebook executive who personally took immediate action and arranged for an intervention by emergency response workers in the young man’s community.

Facebook’s Wednesday announcement focused on new tools for helping people “in real time on Facebook Live,” facilitating live chat support from crisis support organizations via Facebook Messenger and “streamlined reporting for suicide, assisted by artificial intelligence.” There have been reports of people, including a 14-year-old Miami girl, who have broadcast their suicides via the Facebook Live video streaming feature.

Facebook said that it already has 24/7 teams in place to review reports and prioritize the most serious ones, such as those involving suicide. The company has also been providing people who express suicidal thoughts with support, including advising them to reach out to a friend or a support organization. It has worked with psychologists and support groups to recommend helpful text that you might use if someone you know on Facebook is expressing suicidal thoughts.

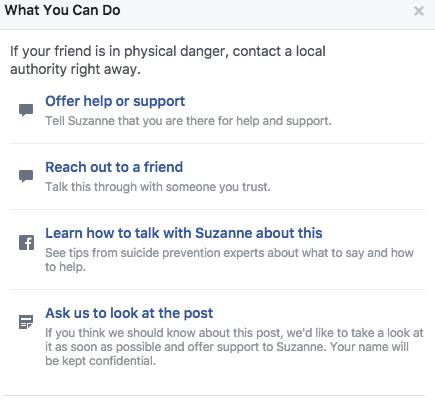

To the right of each Facebook post is a down arrow. If you click it you get options including “I think it (the post) doesn’t belong on Facebook.” Clicking that takes you to an option for posts that are “threatening, violent or suicidal,” and if you click that you’re taken to an area where you can get advice, including specific language if you want to offer help or support.

For example, if you are concerned about your friend Jesse, the app will suggest you write “Hey Jesse, your post really concerned me, and I’d like to help and support you if you’d be open to it.” That same area on the site or app also allows you to send a text to a trained counselor at the Crisis Text Line, call the National Suicide Prevention Lifeline (800-273-8255) or confidentially report the post to Facebook so that its staff can “look at it as soon as possible.”

Now these tools and other resources will be integrated into Facebook Live. Anyone watching a video will have the option to reach out to the person directly and to report the video to Facebook. Facebook is also providing resources to the person reporting to help them help their friend. People who are posting a live video will also see resources encouraging them to reach out to a friend, contact a help line or see tips.

One concern I have about the current reporting process is that finding the screen where you can report a potential suicide is not as intuitive as it could be. I’m not sure exactly how they plan to fix this, but in Wednesday’s announcement Facebook said that it’s “testing a streamlined reporting process,” and said that “this artificial intelligence approach will make the option to report a post about ‘suicide or self-injury’ more prominent for potentially concerning posts.”

The company has also produced an educational video, http://tinyurl.com/FBselfharm, that provides useful tips on how to respond if a friend is “in need.” Everyone should watch this 48-second video, especially young people, who are the most likely to know someone who may need this type of support.

As Facebook pointed out when announcing these new tools, “there is one death by suicide in the world every 40 seconds, and suicide is the second leading cause of death for 15-29 year olds.” Of course, one reason that’s true is that younger people are less likely to die of other causes, but whatever the statistics, any suicide is tragic.

I’ve been told by suicide prevention experts that intervening in a suicide can have lasting effects. A person who contemplates or even attempts suicide may very well be dealing with a problem that will pass or diminish over time. In situations like these, it’s critical to get them the help they need to cope with or solve the problems that are plaguing them. The Centers for Disease Control and Prevention reports that 9.3 million U.S. adults (3.9 percent) reported having suicidal thoughts in the past year, based on a 2013 study. There were 41,149 suicides in 2013, so the overwhelming majority of people who think about suicide, or even attempt it, do not die by it.

Being an online friend means more than just liking and commenting on posts. It means looking out for each other and acting when there is something we can do to help our friends not just survive, but thrive.