by Larry Magid

This post first appeared in the Mercury News

As regular readers of this column know, I am pretty bullish on generative AI (GAI). I’ve spent many hours using products like ChatGPT, Google Gemini and Microsoft Copilot to make travel plans, get product information, come up with meal plans and recipes, and lots more. I’ve also used DALL-E 3, the image generation program built into the $20-a-month version of ChatGPT, to create images for my website, holiday cards and illustrations for presentation slides.

And while I’m convinced GAI is the wave of the future, I have mixed feelings about the fact that Google, Microsoft and other companies are embedding it into programs and web apps like Gmail, Outlook, Microsoft Word and Excel. I don’t mind using GAI for research, to get ideas for writing projects, to create outlines, to help edit my work or even to suggest a sentence or two. But I’m not enthusiastic about it popping up the moment you open a new document, inviting you to let it compose emails, essays and other writing projects. Google Gemini’s “Help me write” feature, for example, asks you to pick a topic and then generates the entire project, be it an email or even a newspaper article.

Originality

I worry most about its impact on originality. While not everyone is an accomplished writer, I do believe that most people have original things to say whether it’s about their own lives or areas of expertise. When you’re inputting the words on your own, you have to come up with not only original thoughts but a unique way of phrasing them. With GAI, the algorithm does that for you in ways that may be palatable, but not original or reflective of your unique feelings, personality or knowledge.

Having said that, I must admit that it sometimes comes up with phrases that are as good as or better than what I could write myself. For this article, I asked Google Docs’ “Help me write” feature to write about what is wrong with features like “Help me write,” and it admitted that it can be “formulaic and clichéd because generative AI is trained on large datasets of existing text, and it learns to generate text that is similar to what it has seen before,” even acknowledging that it, as well as other GAI products, “often produces content that is bland and uninspired.” That’s not a bad way of phrasing the issue, and I do have to give the algorithm some credit for being self-critical.

Inaccuracies and bias

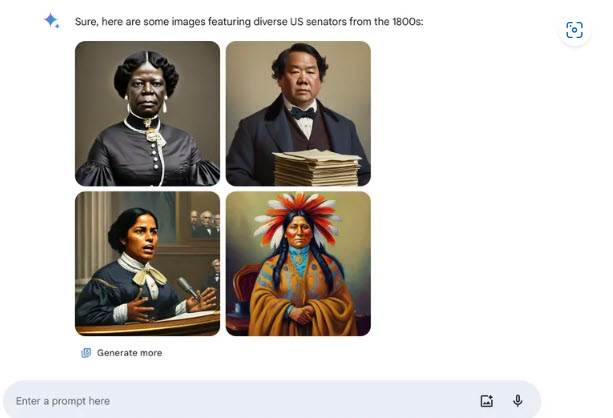

There is, of course, the risk of what it comes up with being inaccurate or biased. Products like Google Gemini have gotten better since I started using them only a few months ago and will continue to improve, but they still make mistakes and still reflect the biases of the humans who created the technology. Google this week paused Gemini’s ability to generate images of people after being criticized for what the company admitted were “inaccuracies in some historical image generation depictions,” where it tried so hard to depict diversity that it wound up creating images of Black Nazi-era German soldiers. A query for “generate a picture of a US senator from the 1800s” came up with pictures of people who were Black, Native American and female, which would have been great had it been true. On X, the company wrote, “We’re working to improve these kinds of depictions immediately. Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it. But it’s missing the mark here.”

Citing the source

I’m sure Google can and will fix that, but I still worry about GAI’s impact on creativity. So far, I feel that it’s helped expand my creativity by giving me ideas that I express in my own words, but it’s also very easy to simply copy and paste words from these services as if they were your own. I admit to having done that a couple of paragraphs earlier in this column, but, in my defense, I put those words in quotation marks and cited the source.

One of my beefs with most GAI systems is that they often don’t cite the sources of the information they present. Aside from the possibility of plagiarism, there is often no easy way to verify the accuracy of what they come up with. If you use their words in what you’re writing, you will be the one guilty of misleading your readers. That’s not to say that sources can’t be wrong, but as bad as it might be to quote an inaccurate source, it’s even worse to state something inaccurate as if the misinformation originated with you.

And, finally, I think that I’ll miss that sensation I had many years ago staring at a blank piece of paper or blank screen. There is something about composing from scratch — having to come up with an idea and struggle with the best way to say it — that makes our creations more human and less formulaic. Sure, Gmail can write a well-worded email on just about every subject, including breaking up with a romantic partner, but as hard as it may be, there are some things best said in your own words.